And some legs. The front, middle, and rear leg pairs are all slightly different structurally, so I will be duplicating the front legs and tweaking the shapes to fit my reference images.

Would you believe I'm still working on my book cover? Turns out XGen for Maya isn't tremendously user-friendly, intuitive, or stable at this point. I'm also moving this week... so I'd really love to get it done. We'll see. Turns out I'm not willing to compromise on the book cover, nor publish with a placeholder. Nor was I able to pull off a quick and elegant execution. So, as with so many indie developers, it'll be released when it's done. My hope is that although the world isn't awaiting this release with bated breath, some of this delay may build excitement? I'm not sure.

Later,

Stuart

Monday, December 15, 2014

Friday, December 12, 2014

Ant modelling

This is the real version of the ant model. The anatomy is much more accurate (I hope) than last time. Note the five-segmented gaster, the lack of separation between scape insertion and clypeus, the emarginate thorax, and the divergent frontal carinae. Any guesses as to the species? I might have already mentioned it.

Still lots of work to be done, but I'm learning a lot about sculpting and ant anatomy, that's for sure.

As for my book, well, the formatting is complete, and I'm working on the cover, so it should be published this weekend.

Later,

Stuart

Monday, December 1, 2014

Non-repeating noise in maya

I'm creating a large surface that I want to have very fine noise. I made a fractal texture and got a style of noise I was fairly happy with. However, to get the scale right, I needed to increase the repeatU and repeatV a ton, around 800. This creates obvious and ugly repeats.

A quick google search led me to this blog post: http://www.sigillarium.com/blog/lang/en/318/

The author describes using two animated fractals (animated just to get two different values) as input to the translateU and translateV of the original fractal. I tried a couple of variations of this, but the biggest problem I found was that it destroyed the integrity (shapes) of the original fractal I created. Sometimes it made weird swirly noise; other times it just created tiny random noise.

After thinking about it a bit, I decided to pipe the outAlpha of a simple fractal into the time attribute of my crafted fractal, with Animated checked. This meant different parts of the surface showed the fractal at different stages of its evolution, which really reduced any noticeable repetition while largely maintaining the "structure" of the original fractal.
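To see why driving time with a second noise breaks up the repeats without wrecking the pattern, here is a toy 1D model in plain Python. It is not Maya code: the two functions below are made-up stand-ins for the crafted fractal and the simple "driver" fractal, chosen only so the example runs anywhere. The crafted pattern repeats every tile, just like a texture with a high repeatU; feeding a slowly varying, non-repeating value into its time input gives every tile a different moment of the same evolving pattern.

```python
import math

def crafted_pattern(u: float, t: float) -> float:
    """Hypothetical stand-in for the hand-tuned fractal.

    Periodic in u with period 1 (one texture tile), and it evolves smoothly
    as the time value t changes, like a fractal with Animated turned on.
    """
    return (math.sin(2 * math.pi * 3 * u + t)
            + 0.5 * math.sin(2 * math.pi * 7 * u - 1.7 * t))

def driver(s: float) -> float:
    """Hypothetical stand-in for the simple fractal feeding outAlpha -> time.

    Smooth and low frequency, with periods that don't line up with the tile,
    so neighbouring tiles receive different time values.
    """
    return 2.0 * math.sin(0.37 * s) + math.sin(0.11 * s + 1.0)

def sample(tile: int, u: float, use_time_driver: bool) -> float:
    """Evaluate the surface at position s = tile + u, with u in [0, 1)."""
    s = tile + u
    t = driver(s) if use_time_driver else 0.0  # constant time = plain tiling
    return crafted_pattern(u, t)

def tile_difference(a: int, b: int, use_time_driver: bool, n: int = 64) -> float:
    """Mean absolute difference between two tiles; 0.0 means an exact repeat."""
    total = 0.0
    for i in range(n):
        u = i / n
        total += abs(sample(a, u, use_time_driver) - sample(b, u, use_time_driver))
    return total / n

if __name__ == "__main__":
    print("plain tiling:", tile_difference(0, 1, use_time_driver=False))
    print("time-driven: ", tile_difference(0, 1, use_time_driver=True))
```

With plain tiling every tile is identical, so the difference is exactly zero; with the time driver each tile is the same kind of pattern at a slightly different stage, which is why the shapes survive while the obvious repeats go away. In Maya the equivalent is simply the connection described above.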

Hope that helps someone out and thanks to Sigillarium for the foundation of the idea.

Later,

Stuart

Sunday, November 30, 2014

Severing a Dendrite - Update

Well, the end of November is here and my book is as finished as it will get. At least the words are. When I uploaded it to Kindle Direct Publishing, several formatting issues became apparent, and I need to work those out before I release it. I'm also working on a cover that will take more time to complete.

I intended to wrap that all up this weekend, but other things got in the way. I'm performing in a play this Saturday, and that consumed much of these final two days of November. So I ask for your patience a little longer before this thing is out the door. Honestly, this delay is probably bothering me more than anyone else, but I felt an update was still in order.

Later,

Stuart

Thursday, November 27, 2014

Ant Practice

Some more work on F. polyctena, based on the Eric Keller tut I mentioned in the previous post. Again, this is a practice run through to learn ZBrush and the workflow. I'm expecting to do a more accurate and careful model for the real thing, which, even though I'm paying more attention to the morphology, should go faster, now that I'm getting more comfortable with the program.

Retopologizing the dynamesh down to a cleaner mesh.

Adding some interesting detail.

Painting using photographs of the ant as stencils.

I am also getting quite close to finishing my book, in a process I'm calling amateur copy-editing. Just a couple of chapters left. So the book should be available Nov 30 as promised. I'm hoping to create a nice cover, but time is short. It remains to be seen whether I will compromise on the cover for the sake of time, put up a placeholder for the time being, or execute with aplomb.

Later,

Stuart

Thursday, November 20, 2014

Ant WIP

As part of my research at the UofT, we're designing an experiment to look at how students learn complex motion through animations. The complex motion in this case is an ant walking, so as a part of that, I'm creating an ant model for the animation(s).

This is my first attempt. There are anatomical problems, and it's not tremendously accurate. I'm mostly using it as a practice run as I learn how to use ZBrush better. I'm going through Eric Keller's Hyper-real Insect Design tutorial. I finally feel like I'm getting a solid handle on how to use ZBrush, even managing to troubleshoot some strange issues that I've run into.

In other news, I'm about 2/3 through "editing" my book, so I'm hopeful I'll be able to release it as promised on Nov 30.

Later,

Stuart

Saturday, November 1, 2014

NaNoWriMo Revisited - First chapter and release date

If you are a long time reader of this blog, you may remember that way back, I participated in NaNoWriMo (National Novel Writing Month). This adventure is chronicled here, here and here. All of the links and widgets are now broken, but you can visit the NaNoWriMo site to get an idea of the process. Today is the right time to start if you want to try it yourself.

I've written more explanation in the foreword of the book, but the brief-ish (brie-fish? mmm) story is this: I wrote the book in November 2009, saved it, and closed it. I meant to go back and edit it or at least read it, because I quite enjoyed the writing process, but it wasn't until this year that I read it in its entirety for the first time. I've decided I don't want to spend the time on a really deep edit and reworking, because other projects are more pressing and relevant to my career goals, but I do want people to get a chance to check it out. So I've just been doing some basic formatting, typo correction, a few tweaks, grammar, that sort of thing.

I'm announcing that I will be releasing my debut novel "Severing a Dendrite" via Kindle Direct Publishing on November 30th! The minimum allowed price for that platform (with 70% royalty) is $2.99, so that's what it'll be. But just to pique your interest and hopefully get you to throw the three dollars at Amazon so they can throw two dollars at me, I'll be sharing the first chapter now and another several chapters on the release day for free right here on this blog.
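For the curious, the "two dollars" is just the 70% royalty rate applied to the list price. This is a rough figure: on the 70% option Amazon also subtracts a delivery fee that depends on file size, so the actual amount per sale is a little lower.

```latex
\text{royalty per sale} \approx 0.70 \times \$2.99 = \$2.093 \approx \$2.09 \quad \text{(before delivery fees)}
```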

Here is the link to download the first chapter in pdf format: http://goo.gl/tBx9Jd

You may have to right-click and "save link as..." if just clicking on it doesn't prompt a download.

And if you like clicking on pictures, here's one with the same result, i.e. pdf downloaded.

The text on the pages should be large enough that reading on a mobile device or e-reader will be possible. For reading in Adobe Reader, I would recommend View > Page Display > Two Page View for a more book-like experience. For the final release, I will be sharing a more substantial section of the book (the first five chapters!) for free in Kindle and EPUB formats so you don't have to bother with pdfs, although I think for full compatibility and ease of reading, I will post a pdf of that too.

Hope you enjoy it!

Later,

Stuart

Thursday, October 30, 2014

How to Ask Questions on Forums

Preamble:

This article is a set of "best practices" based primarily on my own observations from asking, reading, answering, and not answering questions posted on CG forums. The advice here is written with 3D creative software in mind, but many ideas can be generalized to other topics. Likewise, not every point will be relevant for every query; pick and choose what makes sense. Finally, though the advice is intended to increase the probability of a timely and helpful response, there is no guarantee this will happen. Some questions just don't get answered and it's a good idea to have a backup plan in mind.

The presumption here is that you have run into a problem during your creative endeavors. Perhaps you are following a tutorial and the sequence of steps you are doing doesn't match the instructor's result. Or perhaps you've encountered what looks like a hideous bug, complete with jagged legs and colorless eyes. Or maybe you're trying to produce a particular effect and you just don't know how to go about achieving it. You look around the room, but there's no one nearby to help out. Time to go to the interwebs.

The preparation:

Before you create a post on a forum, it's important to do your due diligence.

1) Do some searching to see if an existing solution can be found. Effective web searching, or "googling" as the kids are calling it these days, is an important topic for another time. The basic idea is to use the right terminology, filter for your software version, and try many searches with different phrases. Most problems have been encountered by someone else before you, and it saves everyone time if you can find an existing solution rather than posting about it again.

2) Try to understand a bit more about your problem. Even if you can't find a direct solution, it can be extremely effective to educate yourself about certain terms and ideas related to your problem (e.g. How does Final Gather actually work? What are "normals"? What part of the software controls motion interpolation between keys?). This will help you use the right words in your forum question and sound more intelligent in the process.

The title:

The title of your post should be concise and informative. It is useless to say "Help please" or "Urgent problem"; these types of titles make you sound as though you aren't willing to make an effort. Likewise "Modelling question" or "Issue with render" are equally uninformative. Try to use terms specific to your problem (e.g. "Geometry doesn't follow skeleton", "Creases in mesh after boolean", or "Jagged shadows in render"). Without even clicking on the topic, an experienced user might already have an idea of what the problem might be and a few possible solutions. Congratulations, you've just hooked someone into helping.

The post:

Above all, be courteous. In most instances, the person who ends up helping you will be doing so with no reward or compensation besides the satisfaction of solving a problem and assisting a fellow user. You may be tearing your hair out and warming up your computer-tossing arm, but take a few breaths and approach this professionally.

Start with a one- to two-sentence summary of your problem. Again, many people browsing forums are doing so in their spare time, so in the event someone doesn't have time to read a longer message, give them the low-down up front. This should be a slightly fleshed-out version of your post title (e.g. "I was using the bridge tool to connect a series of edges and the resulting faces don't appear to have any material"). This raises the importance of correct terminology; make sure you're giving tools and commands the right name and look around to find what words other people use when they face similar problems.

Add a couple of line breaks, because smaller chunks of text are visually more inviting to read than a huge block. Then provide some context for the question. What were you doing when you encountered the problem? A detailed sequence of steps will hopefully allow someone to reproduce the problem. What are you trying to achieve? No one can read your mind, so be clear about the desired outcome (maybe you're after more noise in your render). Are you following a tutorial? If so, add a link or share an image of the pertinent step (if copyright allows). What is your experience level? If you've been using software X and have just switched to Y, another convert might be the best person to understand your situation. If you're a senior lighting TD, people will be less likely to assume a newbie error.

Do include what you have already tried. What do you mean you haven't tried anything? Go away and come back when you have. This way users won't waste your time and theirs by suggesting things you've already attempted. It also proves you're serious about finding the answer.

Don't include how frustrated you are and how you have a deadline this weekend and how this always seems to happen and other editorializing. Everyone's project is important and frantic cries will probably not speed things up.

Generally it is a good idea to include your software version. Include hardware info if and only if you suspect a possible hardware problem. Include operating system info if and only if you suspect an operating system problem. If you are getting an error message, copy and paste the error message word for word exactly.

Include screenshots and other images whenever possible. A picture says—what was it, eight hundred words? This being said, don't add eleven screenshots when two will tell the whole story. If you want to show a problem related to geometry, wireframe on shaded is the easiest to interpret. Figure out in your operating system how to take a screenshot of a region of the screen. This will save you time cropping. If you have to capture the whole screen, take that bad boy into an image editor and crop it to the relevant region. No one wants to open and zoom a 2560x1440 image that has a 200 pixel square region showing your glitchy model. Mark up the image too. Take your virtual red pen and add circles, arrows, and exclamation marks (maybe not the last one) to tell others where to look. Use text in your post to tell us what we're looking at too (Is this the broken texture or the one that was working properly?).

Finally, thank readers for their time and assistance and hit submit. Someone may reply within minutes, hours, days, or perhaps not at all. You've crafted a professional, informative question and now you can't do any more—or can you? While you're waiting for a reply, keep doing your research and experiments to try to solve the problem on your own or at least learn more about it.

The follow-up:

If you don't get any responses within what you feel is a reasonable amount of time, don't "bump" the post or say "Twelve views and no replies? What's the matter with people?" Be patient and keep investigating on your own. If you really feel the thread has been lost among the others, you can add a reply with some new information or other solutions you've attempted.

If you were provided an effective solution, write back to a) say thank you and b) confirm that the suggested solution worked. This will help future readers know what to try. If you were provided a partial solution, write back to say what part worked and what didn't. If you were able to solve the rest on your own, make sure to add the specifics of the complete solution, again for future reference. If the offered solution didn't work, share any new behaviour you're experiencing and, as always, be polite.

Conclusion:

While much of this article may seem like common sense, I hope at least it provided a new perspective on composing questions on forums. As a final thought, consider the potential time investment by both parties. If you can get a quick and informative answer, it may save you hours or even days of troubleshooting. On the flip side, another user browsing through a forum likely has many threads available to look through. If they can't understand your problem in a minute or two, they will likely move on and help other people; typically they have nothing invested. Therefore, spend that little bit of extra time to craft your query carefully and it will be rewarded.

Thanks for reading.

Stuart

Tuesday, October 28, 2014

Cover Teaser

I currently have a journal article in review, and if it gets accepted, I can submit an image to be considered for the cover. This, potentially, is a very cool opportunity, so I've been working on an image suitable for the cover and related to the article. I haven't heard back yet about the manuscript, but I couldn't resist sharing a teaser of the image here. Hope you're intrigued.

Later,

Stuart

Sunday, October 26, 2014

The music system

You may have noticed that my blog posts have been rather thin of late. If you haven't, well I'm terribly hurt you haven't been mashing refresh on a daily basis and sighing at the appearance of the post you've already seen 32 times. I have been working on a few things, but the one that has captured my attention most readily and frequently over the past few months has been the idea of a Raspberry Pi based music streaming system. Recently I've made enough progress that I thought I should share what I've been up to and how it's working out so far.

Here's my stereo system. The headphones I got first. I was immediately disappointed to find that I needed more components to really get the audio thing going. So next I got the turntable and receiver from a friend (thanks Andrea!) and, of course, speakers. Later I got a CD player. I thought that was pretty good for a Hi-Fi system (incidentally, the term WiFi is a play on HiFi, which doesn't make any sense to me... wireless fidelity?).

But what's this new white box on the CD player with a weird brown arch on it?

An aside: Please ignore the creative license in the photography. I was messing around and accidentally stumbled on what I think is a neat 70s film look. No? It looks like an amateur who doesn't know how to use on-camera flash? Fair enough.

Wait, hang on, this white box appears to be made of lego (a collective noun). And the brown stuff is a cleverly constructed headphone-adapter-holder and handle! Handle? Why yes...

The top comes off! And what's inside? Well, if you said a Raspberry Pi, then you are cheating because I told you at the beginning of the post and because you can't see the Raspberry Pi in the below image. What you can actually see is a digital to analog converter (DAC) by IQaudIO that is sitting on top of a Raspberry Pi B+.

At this point I suppose further explanation is in order. Raspberry Pis are very small computers that are great for many projects like these. In this instance a Pi can be used with a custom operating system to play music. Here's how this works. I have ripped many of my CDs (as lossless FLAC files) to my network attached storage (essentially hard drives that I also use for backup). The Pi has a wireless dongle so it can access the NAS (pictured here) through the local network.

The operating system manages the music playback, queuing, playlist creation, etc. Then it sends the digital signal to the DAC, which creates a beautiful analog signal for the receiver to amplify and send to the speakers. CD quality music (which, though a contentious topic, for my ears is as good as it gets) is wonderfully reproduced. It is delightful to listen to and the Acrylonitrile Butadiene Styrene in the lego infuses a rich mellowness to the digital conversion. I brushed the base of it with olive oil and that has also made a tremendous impact on the midrange frequencies throughout the burn-in process. Okay, I seem to have gotten slightly off track in my subtle digs at certain constituents of the audiophile community.

Here's where it gets really fun. The OS in question is called RuneAudio, and I can access the music player from any mobile device or computer. I've even generated a QR code for the URL used to access the web UI. This isn't that critical, since it's a simple URL (http://runeaudio), but maybe friends coming over will get a kick out of scanning the printed QR code and choosing some music.

Note that this QR code won't work for you unless you happen to be on my local network or also have a RuneAudio setup. The player looks like this on my phone.

Another fun thing I've done is set up automatic podcast downloads to my NAS from specific RSS feeds, so I can then play them on the stereo. It's also possible to stream audio (e.g. Rdio) from my computer to the Pi using TuneBlade. On Macs, the AirPlay protocol is built in, so that works pretty seamlessly too.
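The post doesn't say which tool fetches the podcasts. As a minimal sketch, assuming plain RSS 2.0 feeds: each episode's audio URL sits in an `<enclosure>` tag, so a downloader only has to collect those URLs and skip episodes whose files are already on the NAS. The function names are hypothetical.

```python
import posixpath
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def enclosure_urls(rss_xml: str) -> list[str]:
    """Collect the audio URLs from the <enclosure> tags of an RSS 2.0 feed."""
    root = ET.fromstring(rss_xml)
    urls = []
    for item in root.iter("item"):
        enclosure = item.find("enclosure")
        if enclosure is not None and enclosure.get("url"):
            urls.append(enclosure.get("url"))
    return urls


def episodes_to_download(urls: list[str], existing_files: set[str]) -> list[tuple[str, str]]:
    """Pair each URL with a local file name, skipping files already saved (e.g. on the NAS)."""
    plan = []
    for url in urls:
        # Use the last path segment, ignoring any query string, as the file name.
        filename = posixpath.basename(urlparse(url).path)
        if filename and filename not in existing_files:
            plan.append((url, filename))
    return plan


if __name__ == "__main__":
    feed = """<rss version="2.0"><channel>
      <item><title>Episode 2</title><enclosure url="http://example.com/ep2.mp3" type="audio/mpeg" length="1"/></item>
      <item><title>Episode 1</title><enclosure url="http://example.com/ep1.mp3" type="audio/mpeg" length="1"/></item>
    </channel></rss>"""
    print(episodes_to_download(enclosure_urls(feed), {"ep1.mp3"}))
```

A scheduled job on the NAS could then fetch each pair with `urllib.request.urlretrieve` into the podcast folder; the scheduling and folder layout are left as assumptions.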

So that's some of what I've been setting up recently. As you can imagine, not everything has gone exactly according to plan, and I've had some hiccups with power supplies, wireless signals, etc. It's still very much a work in progress (next up are a 3D-printed case and an IR remote receiver), but I'm going to make an effort to ensure my other projects don't get too stalled.

Later,

Stuart

Friday, October 17, 2014

Hack a USB game controller for browser reading

This is another example of a minor annoyance that snowballed into a time-consuming yet satisfying and elegant solution, all thanks to AutoHotkey (see Better Transcription... and Color Coding...).

I end up reading quite a lot of articles and documents in my web browser as I'm sure you do too. However, I find that I usually end up hunched over with my eyes too close to the screen. My index finger also gets tired from the repetitive scrolling on the mouse wheel. I thought to myself that it would be nice to just have a single button that I could press to scroll the page down while I sat back from the screen a bit. My mouse is wireless but it's awkward to hold it in my lap. I remembered that there are USB foot pedal peripherals that one can buy and program. But then the lightbulb went on!

In my backpack was a knockoff Super Nintendo USB controller (don't ask me why that was in my backpack; it just was). If I could use the gamepad to control my browser, I could sit back and read documents with ease.

A bit of research later, I came across this AutoHotkey script that helped me identify the parameters of the controller to use, such as button numbers. I then modified the script, and with the help of some other useful pages, like this one, I managed to achieve what I was looking for.

Because the default mouse speed was too fast to accurately click buttons on screen, I gave my Right Alt and Right Ctrl keys the function of decreasing and increasing, respectively, the mouse pointer speed. I don't really use those keys for anything else, and they don't execute any other commands when pressed on their own, so I thought it made sense. The other thing I didn't show in the video is that I mapped the X button to the browser's Back button.
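The speed keys boil down to stepping a value up or down and clamping it. The post doesn't say whether the script changes Windows' own pointer speed or an internal multiplier; this sketch assumes Windows' pointer-speed scale of 1 (slowest) to 20 (fastest), the range the `SPI_SETMOUSESPEED` system parameter accepts. The key names and step size are hypothetical, and actually applying the value (in AutoHotkey, typically a `DllCall` to `SystemParametersInfo`) is left out.

```python
MIN_SPEED = 1   # slowest pointer speed on the Windows scale
MAX_SPEED = 20  # fastest pointer speed on the Windows scale

# Hypothetical key-to-step mapping: Right Alt slows the pointer, Right Ctrl speeds it up.
SPEED_STEPS = {"RAlt": -1, "RCtrl": +1}


def next_speed(current: int, key: str) -> int:
    """Return the new pointer speed after pressing one of the speed keys."""
    proposed = current + SPEED_STEPS.get(key, 0)
    # Clamp so repeated presses never leave the valid range.
    return max(MIN_SPEED, min(MAX_SPEED, proposed))


if __name__ == "__main__":
    speed = 10  # Windows' default pointer speed
    for key in ["RAlt", "RAlt", "RCtrl"]:
        speed = next_speed(speed, key)
    print(speed)  # 9
```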

You can download the script here and make your own changes however you like.

It isn't totally refined or robust (it might take some tweaking to work with your own controller), but in its current state it works the way I want. One thing I don't like is that pressing the right bumper once is effectively translated into pressing the down arrow key twice. I'd prefer a one-to-one translation for more control and for use with other applications (e.g. did you know that using the arrow keys on a Google search results page moves between search results?).
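The post doesn't pin down why one bumper press becomes two arrow-key presses. One common cause in scripts that poll a controller on a timer is sending a key on every poll where the button reads as held, so the count depends on how long the button stays down. A minimal sketch of the usual fix, assuming a polling loop (the class and function names are hypothetical), is to act only on the transition from released to pressed:

```python
class EdgeDetector:
    """Report a button press once per physical press (a released-to-pressed transition)."""

    def __init__(self) -> None:
        self._was_down = False

    def update(self, is_down: bool) -> bool:
        # Fire only when the button is down now but was up on the previous poll.
        fired = is_down and not self._was_down
        self._was_down = is_down
        return fired


def arrow_presses(poll_states: list[bool]) -> int:
    """Count the down-arrow presses a sequence of polled bumper states would send."""
    detector = EdgeDetector()
    return sum(detector.update(state) for state in poll_states)


if __name__ == "__main__":
    # The bumper is held for two polls, released, then tapped again.
    polls = [False, True, True, False, True, False]
    print(arrow_presses(polls))  # 2 (sending on every "held" poll would give 3)
```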

Again, the script download link is: http://goo.gl/zGawnD

Later,

Stuart

Sunday, August 31, 2014

New development

I am very proud to announce that circa February 2015, I will be a new father! I made this illustration as part of our "Facebook announcement", which now seems to be a 21st-century convention.

Sketched on paper and "inked" in Photoshop. The fetus was around 17 weeks at the time (now 18!). Obviously I had to rely on other people's reference images, and I ended up using a range of 15–20 weeks. This wasn't intended to be a very accurate illustration; my self-brief was a fun and appealing "medical illustration".

I've been asked to make the illustration into a series as the fetus grows, but we'll see what happens; I may be too busy with other projects, which will be revealed soon.

Later,

Stuart

Friday, July 11, 2014

Contour render test - Ribosome

This is a quick render test of a molecular complex (50S subunit of the 70S ribosome) that I decimated in ZBrush and then added a contour shader to, with a bit of post-processing in Photoshop. It's part of a larger piece that I will hopefully be able to share with you soon.

Later,

Stuart

Monday, June 30, 2014

Saturday, June 21, 2014

Some recent sketches

I thought I would post some sketches I've done recently...

Imaginative organisms:

and real ones:

Later,

Stuart

Monday, June 16, 2014

Autohotkey Color Coding

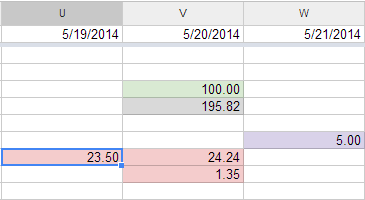

I do some financial tracking in Google Docs, which is great, but isn't the greatest for hotkeys. Anyway, I was frustrated that it took a few clicks to add color coding to individual cells as I entered data. There is conditional formatting, but that didn't suit my purpose because the data isn't consistent for the category.

AutoHotkey to the rescue again! I made a post recently about AutoHotkey, and I have to say that it is a great tool, especially for someone as hotkey-obsessed as I am.

Colors in an instant! Fake numbers here :)

Here AutoHotkey records my mouse position, does a couple of quick clicks to add color coding to the selected cell(s), and returns the mouse pointer to where it was originally. It's pretty slick. Of course, if you want to use this script yourself, you'll have to change the pixel positions, because they're customized to how the window is sized on my monitor. If you want to take a look at it, it's here for download. I also mapped the awkward Ctrl+Shift+F# keys to some of my G-series macro keys.

Later,

Stuart

Wednesday, June 11, 2014

Tuesday, June 3, 2014

Bouncy bouncy

I've recently started trying my hand at some "traditional" frame-by-frame "pencil" animation. That's a lot of quotes, basically because it's in Flash, so nothing is analog. It's pretty fun so far. I'm starting on Richard Williams's The Animator's Survival Kit, which I'm really looking forward to.

Later,

Stuart

Tuesday, May 13, 2014

Better transcription with VLC and Notepad++

Yesterday I posted about humorously bad automatic audio transcription. Turns out that's not the answer, so I turned to the manual route for the time being.

The biggest problem is that, because I don't have any dedicated transcription software, I'm stuck with a very awkward method: Alt+Tabbing between VLC (the media player) and Notepad++ (my text editor), using the spacebar to play and pause and Alt+Left and Alt+Right to skip a few seconds backward and forward. This gets really clumsy when I don't pause before switching to Notepad++, so the audio keeps playing too far, or when I forget where I am and start typing in the VLC window. Very slow. So I set about remedying this. What follows is my solution, which might help you speed up your transcription too if you can't afford dedicated software. I'm sure there are other free tools or more elegant solutions around, but I like using Notepad++ and VLC, and my solution is working well for me so far. It should work with any text editor, although VLC is required.

So if you're following along at home, get VLC and AutoHotkey and Notepad++ [optional] and Windows. Sorry, AutoHotkey is Windows only as far as I know.

I built a little script that runs in the background. Because I have a keyboard (Logitech G710+) with several macro keys, I was able to bind a complex hotkey to a single button that I can reach with my pinky while typing. I chose Ctrl+Alt+(a number) because those combinations weren't already bound to anything in Notepad++, and since they live on macro keys I don't have to remember them anyway. The huge advantage is that I can play, pause, and jump back and forward without losing focus in my text editor. I never have to take my hands off the keyboard or touch the VLC window. With the final hotkey, Ctrl+Alt+5 bound to G1, I can pull the current timecode from the media file and paste it into my text editor to mark the spot I'm transcribing.

Here are the keys and their functions in the order I can reach them on my keyboard:

G6: Ctrl+Alt+8 - Play or pause the VLC media file without losing focus in the text editor

G5: Ctrl+Alt+9 - Jump back 3 seconds

G4: Ctrl+Alt+0 - Jump forward 3 seconds

G3: Ctrl+Alt+7 - Jump back 10 seconds

G2: Ctrl+Alt+6 - Jump forward 10 seconds

G1: Ctrl+Alt+5 - Paste the current timecode into the text
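The AutoHotkey script itself drives VLC directly, but the arithmetic behind these bindings is simple enough to sketch. Assuming positions in seconds, the jumps in the list above become clamped offsets, and the pasted marker is just a formatted position. The bracketed [HH:MM:SS] marker style and the helper names are assumptions for illustration, not necessarily what the script pastes.

```python
# Seek offsets in seconds for each hotkey, following the list above.
JUMPS = {
    "Ctrl+Alt+9": -3,   # G5: back 3 s
    "Ctrl+Alt+0": 3,    # G4: forward 3 s
    "Ctrl+Alt+7": -10,  # G3: back 10 s
    "Ctrl+Alt+6": 10,   # G2: forward 10 s
}


def seek(position: float, hotkey: str, duration: float) -> float:
    """Apply a jump hotkey, keeping the result inside the media file."""
    return min(max(position + JUMPS[hotkey], 0.0), duration)


def timecode(seconds: float) -> str:
    """Format a playback position as a [HH:MM:SS] marker to paste into the transcript."""
    hours, remainder = divmod(int(seconds), 3600)
    minutes, secs = divmod(remainder, 60)
    return f"[{hours:02d}:{minutes:02d}:{secs:02d}]"


if __name__ == "__main__":
    position = 125.0
    position = seek(position, "Ctrl+Alt+7", duration=2700.0)  # back 10 s
    print(timecode(position))  # [00:01:55]
```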

You can change the "jump distances" by editing the jump lengths assigned to Alt+Left/Right and Ctrl+Left/Right in VLC's hotkey preferences.

You can use the hotkeys even without a macro keyboard; just edit them in the .ahk file to something a little easier, like Ctrl+` or something. Some helpful documentation on that here.

Finally, you can download the script here!

Hope that helps someone, because it's saving me lots of headaches already.

Later,

Stuart

Monday, May 12, 2014

Bad auto transcription

I ran a focus group today with a few undergraduate students about molecular visualization. I need to transcribe the 45-minute session, so I figured I would try an automatic transcription for a rough first pass. Adobe Premiere has a feature that can do this and even identify individual speakers. You can import a script to help it along, because it's designed to sync your script with scenes shot for a movie, but I obviously didn't have one, so I just let it go from scratch. The following is some of the output:

[Speaker 45] it's changed this that this lady

[Speaker 18] and a video or go to whoa and Simone hostage to wear this dress

[Speaker 6] just because the slopes the festival he says it is that in ten states it's a certain action yes and fifty years and when I was contagious there

[Speaker 83] it it it it it it it it it it it

[Speaker 103] and cheese over from a new light [ed. note: there were only 7 people in the room]

[Speaker 6] we know what's happening in Sausalito...

[Speaker 16] ... just can't go to the point and click yes instead you should keep in mind that again that abortion is random it is why is this just call him to stick to the orange blossoms many other things...

[Speaker 51] is so so are our first Easter Specter it to others the demotion of business Marquette but I think mom was looking for the orange market well

[Speaker 25] she texted me today secrets in the Rapids

[Speaker 6] still threatened to compete and then one day to make it

[Speaker 18] who is one of the tragedy of errors or the schnapps they know about Ohio...

[Speaker 9] and how their teacher they die will be different in them isn't it close to him

[Speaker 7] just a mechanism Liverpool cables that seemed so far to a mechanism by which locals

[Speaker 25] cancer with the crops

[Speaker 6] lip and yet you can earn you as the cats family when I go we should sell this theory TTC

[Speaker 20] so nice to see change which was nice to be competing for the weekend

[Speaker 7] Satan and his might just mean all those move around

So it was a pretty good discussion; we learned some very interesting things. Needless to say, I'm going to have to transcribe it from scratch myself and leave Adobe to work with defined scripts. But I can't help being curious about those secrets in the Rapids...

Later,

Stuart

Thursday, May 8, 2014

Adding variation to objects using a single shader

I'm working on a scene with many (potentially hundreds of) objects that are pretty much the same. In fact, many of them are instances sharing the same shape node. I wanted some subtle variation in the color of the objects, not across the surface of each object, but from object to object.

After doing some research, I encountered a method described here which uses a triple switch node. I've used shading switch nodes a little bit in Maya before so this seemed feasible. The biggest problem, however, was that the method doesn't work with instanced objects out of the box. So I set out to develop a "better" system that works even with instanced objects and combinations of instanced and non-instanced objects.

The idea is that you create a ramp that is piped into any attribute you want to vary. The ramp shows a range of colors and each object gets a random value which corresponds to a single location on the ramp. If you're not able to get a ramp looking random enough for the extent of variation you want, you can always play with the HSV Color Noise attributes or plug something else into the ramp.
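To make the idea concrete, here is a minimal sketch of what the render effectively does per object: look up one position on a ramp and use that single color for the whole object. It assumes the ramp uses linear interpolation between its color entries; the function names and the seeded random generator are illustrative, not part of the Maya setup.

```python
import random

Color = tuple[float, float, float]


def ramp_lookup(entries: list[tuple[float, Color]], v: float) -> Color:
    """Linearly interpolate the color at position v (0-1) along a ramp."""
    entries = sorted(entries, key=lambda entry: entry[0])
    # Positions before the first entry or after the last take that entry's color.
    if v <= entries[0][0]:
        return entries[0][1]
    if v >= entries[-1][0]:
        return entries[-1][1]
    for (p0, c0), (p1, c1) in zip(entries, entries[1:]):
        if p0 <= v <= p1:
            t = (v - p0) / (p1 - p0) if p1 > p0 else 0.0
            return tuple(a + (b - a) * t for a, b in zip(c0, c1))
    raise ValueError("ramp entries do not cover v")


def assign_colors(objects: list[str], entries: list[tuple[float, Color]], seed: int = 0) -> dict[str, Color]:
    """Give each object one random ramp position, hence one flat color per object."""
    rng = random.Random(seed)
    return {name: ramp_lookup(entries, rng.random()) for name in objects}


if __name__ == "__main__":
    red_to_blue = [(0.0, (1.0, 0.0, 0.0)), (1.0, (0.0, 0.0, 1.0))]
    print(ramp_lookup(red_to_blue, 0.5))  # (0.5, 0.0, 0.5)
    print(assign_colors(["coil1", "coil2", "coil3"], red_to_blue))
```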

1) The image below shows how to set up the initial shading network. Plug a V Ramp into the attribute of choice in your material, and then create a Single Switch node, which gets plugged into the place2dTexture node (output > vCoord).

Edit Dec 2015: Probably simpler to skip the place2dTexture and plug the singleShadingSwitch.output directly to the vCoord of the ramp.

2) Then assign your material to everything you want to exhibit the variation.

3) Then open the Attribute Editor (AE) for your shading switch and press the Add Surfaces button.

4) Then run this code. Just paste it into the script editor, select it all and "execute" or hit enter on the numpad.

```mel
// Name of the singleShadingSwitch to drive; change this to match your scene.
string $switch = "singleShadingSwitch1";

// Incoming (-d false) connections from shape nodes. With -c, the result comes in
// pairs: [switch plug, connected shape, switch plug, connected shape, ...].
string $connectedAttr[] = `listConnections -c true -d false -t "shape" $switch`;
//print $connectedAttr; // DEBUG

for ($i = 0; $i < (size($connectedAttr) / 2); $i++) {
    // Odd entries are the connected shapes; pair $i is assumed to feed input[$i].
    string $obj = $connectedAttr[($i * 2) + 1];

    //string $parent[] = `listRelatives -p $obj`; // get parent transform for instanced transforms
    //$obj = $parent[0];                           // change object relationship to parent
    //print ($obj + "\n"); // DEBUG

    // Add a 0-1 float attribute to hold this object's ramp position.
    if (!`attributeExists "randVCoord" $obj`) {
        addAttr -at "float" -ln "randVCoord" -keyable true -hidden false -min 0 -max 1 $obj;
    }

    // Store a new random value on the object.
    float $randCoord = rand(0, 1);
    setAttr ($obj + ".randVCoord") $randCoord;

    // Feed the object's value into the matching switch input.
    if (!`isConnected ($obj + ".randVCoord") ($switch + ".input[" + $i + "].inSingle")`) {
        connectAttr ($obj + ".randVCoord") ($switch + ".input[" + $i + "].inSingle");
    }
}
```

You may have to edit the name of the singleShadingSwitch in the script. I thought about making a custom input dialog, but getting the functionality working was difficult enough, and I didn't want to delve into the whole GUI aspect.

5) Because this is all about visible connections, you can always tweak the ramp or even tweak the random values for individual objects. To do that, adjust the attribute called Rand VCoord to any value from 0–1. The effects probably won't show up in the viewport, but if you hit render the values should appear.

Here are a couple of examples:

Red-to-blue ramp into the color

Fractal noise into the bump channel, with the shader switch controlling amplitude

I was particularly concerned about instanced objects working with this, so I tested it on my molecular geometry. The first test only gave two different random values, because the transform node above the coiled-coil level of the hierarchy was itself instanced. So I pulled everything out of the hierarchy (not ideal, but it works as a quick last step), which resulted in transform1, transform2... transform96, each with an instanced transform as a child. To put the random attribute on the proper transform, I just added two lines of code that change the focus from the child to the parent (those are the two commented-out lines other than the debug print statements). Then I got the middle image, which is a bit extreme, but it works! So I tweaked my ramp a few times and got the image on the right in no time at all.

You can also use this method to add things other than random variation. Instead of a random value, you could assign the distance to the camera (mapped to 0–1 with setRange) or any other per-object transform info.
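For the distance-to-camera variant, the value fed to the switch just needs to be rescaled into the ramp's 0–1 range, the kind of linear remap a setRange node performs. A minimal sketch, assuming world-space positions are already known; the function names are hypothetical, and the explicit clamp is there so every result is a valid ramp position.

```python
import math


def set_range(value: float, old_min: float, old_max: float,
              new_min: float = 0.0, new_max: float = 1.0) -> float:
    """Linearly remap value from [old_min, old_max] to [new_min, new_max], clamped."""
    if old_max == old_min:
        return new_min  # avoid dividing by zero when every object is equally far away
    value = min(max(value, old_min), old_max)
    return new_min + (value - old_min) / (old_max - old_min) * (new_max - new_min)


def camera_distance_coords(camera_pos: tuple[float, float, float],
                           positions: dict[str, tuple[float, float, float]]) -> dict[str, float]:
    """Map each object's distance from the camera to 0 (nearest) .. 1 (farthest)."""
    distances = {name: math.dist(camera_pos, pos) for name, pos in positions.items()}
    nearest, farthest = min(distances.values()), max(distances.values())
    return {name: set_range(d, nearest, farthest) for name, d in distances.items()}


if __name__ == "__main__":
    objects = {"a": (1.0, 0.0, 0.0), "b": (0.0, 3.0, 0.0), "c": (0.0, 0.0, 2.0)}
    print(camera_distance_coords((0.0, 0.0, 0.0), objects))  # {'a': 0.0, 'b': 1.0, 'c': 0.5}
```

In the Maya setup, values like these would be written into each object's randVCoord attribute in place of rand(0, 1).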

Later,

Stuart

Saturday, May 3, 2014

sIBL Render

I spent a bit of time testing out some sIBL and Lightsmith tools that make setting up studio renders pretty straightforward. I still need to decide how this might help with molecular environments, but it might be a place to start. I'm also not sure exactly what the object in the middle is; it's just a cylinder extruded a bunch.

I also got to try my watermark, although it's not embedded in anything 3D.

And in case you are also experimenting with Maya 2015 and wondering where Keep Faces Together is, well now it's in the Preferences under the Modelling tab.

Later,

Stuart

Friday, May 2, 2014

Look at Selection

I just installed Maya 2015 and have been playing around with some of the new features. While perusing the menus, I stumbled across the "Look at Selection" command in the View menu of the modelling panel. I'm sure it's a very old feature (it goes back at least to Maya 2008), but I had never used it because it doesn't have a hotkey assigned to it. I doubt many other people use it much either, so I haven't seen people using it in tutorials etc.

It's a very handy command because it does basically the same thing as "frame selected" or "frame all" (it centers the camera's pivot on what you're working on), but it doesn't zoom the camera in or out, so you don't end up claustrophobically close to a selection of vertices. Does this sound familiar? Modeling - Alt+LMB orbit - [oh, I'm tumbling away from where I'm working] - f - Alt+RMB zoom out, zoom out, zoom out - modeling, and repeat. So it's great because the camera keeps its distance, but there's no hotkey for it... yet.

I used the MEL mentioned here as a starting point and modified it to work with the Look at Selection command, which is

viewLookAt [-position linear linear linear] [camera]

so the little MEL script I ended up with is

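```mel
// Run Look at Selection (viewLookAt) on the camera of the panel that has focus
string $currentPanel = `getPanel -withFocus`;
string $panelType = `getPanel -typeOf $currentPanel`;

// Only model panels (perspective/ortho views) have a camera to re-aim
if ($panelType == "modelPanel")
{
    string $camera = `modelEditor -q -camera $currentPanel`;
    viewLookAt $camera;
}
```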

And then all that was needed was to assign a hotkey to it as described here.

Basically I created a new command in the hotkey editor (which I've assigned the hotkey '?' itself) and then assigned 'Ctrl+f', which wasn't already assigned to anything and is related to the frame hotkey 'f'.

I might end up just remapping 'f' to it if I find I don't use the frame command much anymore. The command is also handy because if there's nothing selected the camera just centers on the origin, instead of zooming way out into space as 'frame' will do in a large scene. Hopefully that's helpful for some people.

Tuesday, April 29, 2014

Creating an embossed watermark

I recently heard a talk about watermarking your images and different ways to add your copyright information without it being easily removed. I'm not the best at that currently, though I typically try to add something that doesn't detract too much from the image. I also don't sell any work currently, so I'm not too terribly fussed about holding everything close yet, although I understand its importance.

But it got me thinking that it would be nice to have a way to embed a little signature in the 3D environment itself. Just like a potter or sculptor can use a little stamp to mark their signature in clay, it would be cool to easily add my own signature to 3D sculpts or rendered scenes.

First I created a graphic signature in Illustrator, inspired by Greek patterns and Asian characters. I might play with this idea more, but for now here it is.

I exported it from Illustrator as a PNG. I used 512x512, but that might be overkill. Bringing it into Photoshop, I added some space around the edges, since the export cropped right to the art. Only a small amount of space is really necessary (not as much as above); it just needs to leave room for blurring the edges a bit, perhaps a 1-2 pixel Gaussian blur, which results in a slight bevel. Then you can use the image to make a stamp in various applications.
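To show why the padding matters before blurring, here's a small Python sketch on a single row of pixels (1.0 = signature, 0.0 = background). It's a simplified model, not what Photoshop does internally: it assumes a plain Gaussian kernel that clamps reads at the image border, and the helper names are made up for illustration. Art cropped right to the edge has no background to blend into, so its edge stays hard; with a few pixels of padding the edge becomes a soft ramp, which is what reads as a bevel.

```python
import math


def gaussian_kernel(sigma):
    """Normalised 1D Gaussian weights covering about 3 sigma on each side."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row(row, sigma):
    """Blur one row of pixels, clamping reads at the image border."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    last = len(row) - 1
    blurred = []
    for i in range(len(row)):
        total = 0.0
        for k, weight in enumerate(kernel):
            j = min(max(i + k - radius, 0), last)
            total += weight * row[j]
        blurred.append(total)
    return blurred


def pad_row(row, amount):
    """Add background pixels on both sides, like extending the canvas."""
    return [0.0] * amount + row + [0.0] * amount


signature = [1.0] * 8                          # art cropped right to the edge
hard = blur_row(signature, 1.5)                # nothing to blend into: stays flat
soft = blur_row(pad_row(signature, 4), 1.5)    # padded: edges ramp like a bevel
```

With a 1.5 pixel blur, the padded row rises gradually over a few pixels on each side, while the unpadded row stays at 1.0 everywhere.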

ZBrush:

I don't actually have ZBrush installed here, so I can't guarantee these steps will work perfectly.

Invert the image so that the surrounding area is black. I believe by default black has no effect on the surface and white is maximum effect. You can experiment with different sizes and 8-bit and 16-bit images to see what gives the best result. It seems like ZBrush alphas are generally psd files.
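The inversion and the black-to-white mapping can be sketched in a few lines of Python, assuming (as above) that black means no effect and white means full effect. `alpha_depth`, `max_depth` and `smallest_step` are hypothetical names, not ZBrush settings. The last function hints at why bit depth can matter: an 8-bit alpha has only 255 steps between no effect and full effect, so a shallow blurred bevel may show stepping, while a 16-bit alpha has 65535.

```python
def invert(value, bit_depth=8):
    """Invert a pixel so the background becomes black and the signature white."""
    max_value = (1 << bit_depth) - 1
    return max_value - value


def alpha_depth(value, max_depth, bit_depth=8):
    """Map an alpha value to a depth: black -> 0, white -> max_depth."""
    max_value = (1 << bit_depth) - 1
    return max_depth * value / max_value


def smallest_step(max_depth, bit_depth):
    """Depth difference between two neighbouring alpha levels."""
    return max_depth / ((1 << bit_depth) - 1)


# A black signature pixel (0) on a white background (255), before inverting.
signature_depth = alpha_depth(invert(0), 1.0)      # full effect
background_depth = alpha_depth(invert(255), 1.0)   # no effect
```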

Then bring the image into ZBrush as an alpha and apply it with a DragRect brush. Hold ctrl to cut the alpha into the surface instead of bringing it out.

Mudbox:

Similar to ZBrush, export the inverted image and add it to your list of stencils. Choose the imprint sculpt tool and select your stencil. Adjust the position and size of the stencil and then make sure your brush size is larger than the whole stencil. Set the strength to an appropriate amount (m) and paint once over the stencil. You now have a lovely embossed signature.

Maya:

Adding the signature as a displacement map is a little trickier. There are probably many good ways to do this, but the following is how I ended up getting the result I wanted.

First in Photoshop set your background to exactly mid grey and leave the signature area as black. Export as an image, and in Maya create a displacement node and plug your image file into it (file.outAlpha > displacementNode.displacement). Leave the scale as 1 in the displacement node. Plug the displacement node into the shading group of whatever shader is on your object (displacementNode.displacement > myShader1SG.displacementShader).

Check Alpha Is Luminance in the file node. In order to make sure that the middle grey keeps the rest of the surface at the same depth you must correct the alpha values. Create an expression that sets the Alpha Offset to -0.5 times the Alpha Gain (file1.alphaOffset = file1.alphaGain*-0.5;). You can now play with the Alpha Gain to get the right depth (try a value around 0.05). Also make sure the Default Color is black, the Color Gain is white, and the Color Offset is black (It seems weird that the default color would be black while the surrounding color in the texture itself is mid-grey, but it seems to only work if that's the case).
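Here's the arithmetic behind that expression as a quick Python check. It assumes the file node computes its alpha as `luminance * alphaGain + alphaOffset` (with Alpha Is Luminance on) and that the displacement node, at a scale of 1, passes that value straight through; the function names are just for illustration.

```python
def alpha_offset_for(alpha_gain):
    """What the expression file1.alphaOffset = file1.alphaGain*-0.5; computes."""
    return alpha_gain * -0.5


def displacement_for(luminance, alpha_gain):
    """Assumed file-node alpha: luminance * alphaGain + alphaOffset (scale 1)."""
    return luminance * alpha_gain + alpha_offset_for(alpha_gain)


MID_GREY = 0.5
BLACK = 0.0
WHITE = 1.0

surface = displacement_for(MID_GREY, 0.05)   # the untouched surface
stamp = displacement_for(BLACK, 0.05)        # the signature itself
```

So mid grey stays exactly where it was, the black signature sinks by half the Alpha Gain (0.025 with a gain of 0.05), and anything whiter than mid grey would push outward by the same amount. That's why the offset has to follow the gain rather than being a fixed number.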

You may have to tweak the place2dTexture Coverage and Translate Frame to place the image in the right position, depending on the UVs. I experimented with adding multiple UV sets to lay out some new UVs to get the texture in the right spot, but unfortunately it seems like displacement maps can't work with alternate UV sets. I suppose you could create a new UV set for your regular textures and use map1 as your "signature" set.
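When picking Coverage and Translate Frame values, a simplified model of the place2dTexture mapping can help. This sketch assumes wrapping is turned off and Repeat, Offset and Rotate are left at their defaults, so a surface UV maps into the image as (u - translateFrame) / coverage, and anything outside 0-1 falls back to the Default Color. Treat it as an approximation; the function names are hypothetical.

```python
def to_texture_uv(u, v, coverage=(1.0, 1.0), translate_frame=(0.0, 0.0)):
    """Return the image-space UV, or None where the Default Color is used."""
    tu = (u - translate_frame[0]) / coverage[0]
    tv = (v - translate_frame[1]) / coverage[1]
    if 0.0 <= tu < 1.0 and 0.0 <= tv < 1.0:
        return tu, tv
    return None


def frame_for(u_min, v_min, u_max, v_max):
    """Coverage and Translate Frame that fit the stamp into a UV rectangle."""
    coverage = (u_max - u_min, v_max - v_min)
    return coverage, (u_min, v_min)


# Put the stamp in the middle quarter of the UV square.
coverage, translate_frame = frame_for(0.25, 0.25, 0.75, 0.75)
```

For example, a UV of (0.5, 0.5) lands in the middle of the image, and a UV of (0.1, 0.1) falls outside the frame and gets the Default Color.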

You can also add a separate color for the embossing: just add a new texture or shader and set up a layered texture or layered shader. Use the outColor from a new black-and-white copy of the image as the transparency for your regular texture, and you might as well use the same place2dTexture for that B&W image too. You can also add ambient occlusion to the mix by creating a surface shader, plugging a mib_amb_occlusion node into its color, and connecting the displacement node to the shading group of that AO surface shader. You will need at least two AO surface shaders: one for everything that doesn't have a displacement map, and one for each object that does. I've included an image of the shading network to hopefully illustrate what's going on.
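The color layering boils down to a masked blend. A minimal Python sketch, assuming the black-and-white copy is white where the signature is and that its value acts as the regular texture's transparency (1.0 lets the emboss color underneath show through); `blend_pixel` is an illustrative name, and this ignores Maya's other layered texture blend modes.

```python
def blend_pixel(regular, emboss, mask):
    """Composite one RGB pixel.

    mask = 0.0 shows only the regular texture, mask = 1.0 shows only the
    emboss color, and the blurred edge values in between mix the two.
    """
    return tuple(r * (1.0 - mask) + e * mask for r, e in zip(regular, emboss))


red_texture = (1.0, 0.0, 0.0)
blue_emboss = (0.0, 0.0, 1.0)

outside = blend_pixel(red_texture, blue_emboss, 0.0)
inside = blend_pixel(red_texture, blue_emboss, 1.0)
edge = blend_pixel(red_texture, blue_emboss, 0.5)
```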

Finally, it's a good idea to create a mentalrayDisplacementApproximation with the mental ray Approximation Editor to increase the quality of the displacement. Increase the U and V subdivisions to smooth out the result.

Happy Embossing!

Later,

Stuart

PS I just looked it up and apparently if it's sunk into the surface, it's debossing. Apologies.

Monday, April 28, 2014

Random Molecular Motion

Here are a few visualizations of a random molecular walk and binding event that we showed to some undergraduate science students. They were pretty clever about understanding and interpreting the motion, but it's still difficult to tell whether they are able to carry that over to the rest of their understanding of molecular biology. Unfortunately, because of the high complexity and speed of the motion, Vimeo's compression does a poor job of displaying it, even in HD. Apologies for this. I contacted the Vimeo staff, and they aren't able to push the compression settings as far as I'd like because doing so apparently slows down delivery.

Later,

Stuart

Monday, April 7, 2014

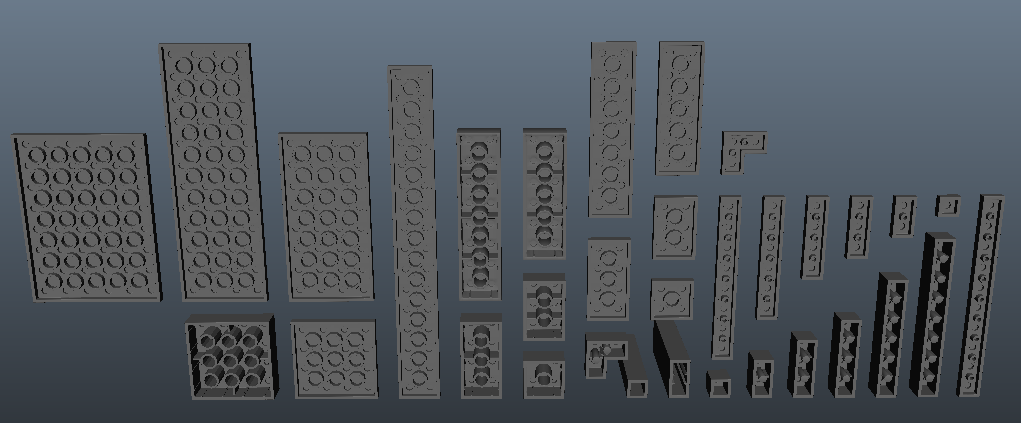

More bricks and plates

A bit more work on some more basic lego pieces:

Not too much interesting stuff, but still having fun.

Later,

Stuart

Sunday, March 16, 2014

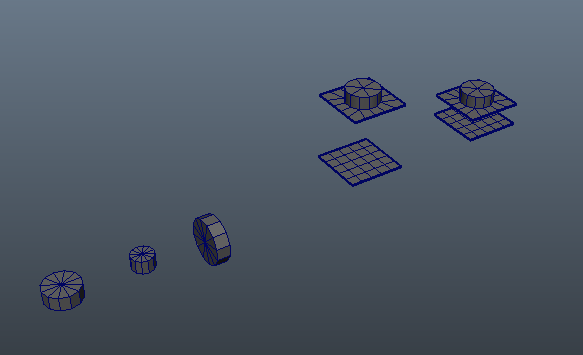

Top and bottoms

More standard bricks. It's interesting that the tops are pretty standard but the bottoms actually vary a fair bit.

I got the lego logo working, but I want to get the bump rendering in the AO pass and that's not quite working yet, so next time I'll have that.

The font looks really narrow here, but if you examine real studs, the logo on them doesn't use the same balloony letters you usually see.

Thursday, March 6, 2014

Your standard Lego brick

Spent far too long on a single brick. Standard bread and butter 4x2 brick. But I figure if I get this one just right, I can create many more combinations very very quickly. We'll see how that turns out. I'm still wrestling with the beveling that Maya has decided to do. Well, I did the beveling, but Maya did a crappy job of placing the vertices and edges. And faces. Feeling very particular about this.
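Getting one brick just right pays off because most other bricks reuse the same numbers, scaled by stud count. As a sketch, here are the commonly quoted nominal LEGO dimensions (8.0 mm stud pitch, 9.6 mm brick height, 3.2 mm plate height, and about 0.1 mm of clearance on each side). These are general reference figures rather than my own measurements, and `brick_size` is just an illustrative helper.

```python
STUD_PITCH_MM = 8.0     # centre-to-centre distance between studs
BRICK_HEIGHT_MM = 9.6   # body height of a standard brick, studs excluded
PLATE_HEIGHT_MM = 3.2   # a plate is one third of a brick
CLEARANCE_MM = 0.1      # gap left on each side so neighbouring bricks fit


def brick_size(studs_x, studs_y, plates_high=3):
    """Outer body dimensions (mm) of a rectangular brick or plate, studs excluded."""
    length = studs_x * STUD_PITCH_MM - 2 * CLEARANCE_MM
    width = studs_y * STUD_PITCH_MM - 2 * CLEARANCE_MM
    height = plates_high * PLATE_HEIGHT_MM
    return length, width, height


length, width, height = brick_size(4, 2)   # the standard 4x2 brick
```

That gives roughly 31.8 x 15.8 x 9.6 mm for the 4x2, and passing `plates_high=1` gives the matching plate.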

I'm doing a lot more detail than I did with my original Lego project, and it's already getting fun. Need to put the lego logo as a bump or disp map on the studs.

Later,

Stuart

Monday, February 24, 2014

Lego again

Well, I've been wanting to do more of this for years now, and there's no better time than the present.

Going to be doing a bunch of this, hopefully. I'll start with this to build up my brick library :)

And my old brick measuring specs:

Later,

Stuart

PS Is it obvious that I just watched The Lego Movie? Go see it if Lego has any place in your heart.
